In our model, the arrival of data packets from the network is an asynchronous

event, and therefore, we cannot directly probe the source code for

measuring the software overhead associated to the reception event.

Consequently, we have to rely on indirect probing. Observe that without

the support of programmable network co-processor, dispatching of arrived

packets has to be carried out by the receiver host processor. This

is because of the protection reason, moving data across address spaces

should be handled by privilege process only. As the host processor

is engaged during message reception, other execution flows would be

affected. Based on this observation, consider if we know about the

expected execution time of a particular computation segment. And on

a particular run of this computation segment, there is message reception

happened in the background, we would expect to observe a remarkable

increase in execution time. Thence, we have devised a technique to

indirectly measure the software overhead associated to any message

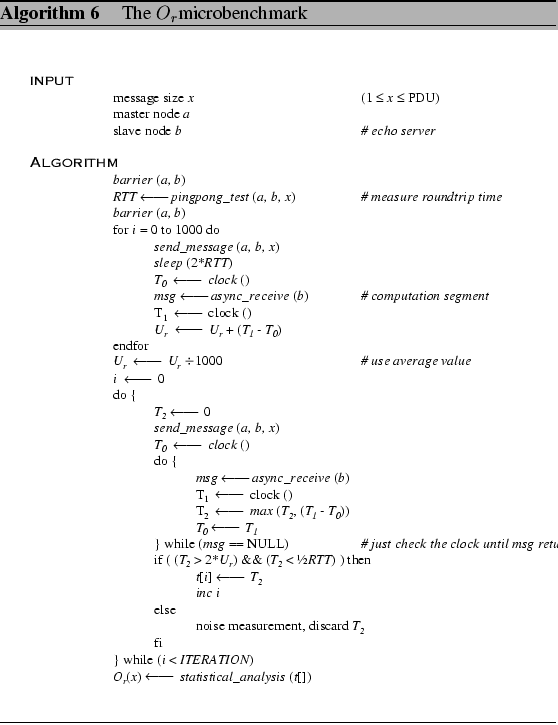

reception. In summary, Algorithm 6 presents the pseudocode

for our ![]() microbenchmark.

microbenchmark.

Our technique to measure ![]() is quite straightforward. As

we need to detect whether a message (packet) has arrived, we have

to poll for the data arrival. Thus, we use the polling action to be

the computation segment. However, if the software overhead associated

to this receive call is too large, we should devise other lightweight

computation segment. First, we have to measure the expected execution

time of this computation segment, as well as the round trip time for

current message size. In our pseudocode, we just take the average

of these measurements, but we can adopt stringent statistical test

to get more accurate value. Then we run another pingpong-like test

to obtain a set of

is quite straightforward. As

we need to detect whether a message (packet) has arrived, we have

to poll for the data arrival. Thus, we use the polling action to be

the computation segment. However, if the software overhead associated

to this receive call is too large, we should devise other lightweight

computation segment. First, we have to measure the expected execution

time of this computation segment, as well as the round trip time for

current message size. In our pseudocode, we just take the average

of these measurements, but we can adopt stringent statistical test

to get more accurate value. Then we run another pingpong-like test

to obtain a set of ![]() measurements. After the master node

sends out one message, it immediately evaluates how long has the host

processor been engaged in polling for message arrival. It records

the maximum time spent in each run until it receives a message. To

filter out noise measurements, we use our baseline measurements to

select possible candidates. This pingpong-like process is running

for many times until we obtain a sufficient large sample size. Then

the collected dataset is processed by some statistical routine to

extract the information we need.

measurements. After the master node

sends out one message, it immediately evaluates how long has the host

processor been engaged in polling for message arrival. It records

the maximum time spent in each run until it receives a message. To

filter out noise measurements, we use our baseline measurements to

select possible candidates. This pingpong-like process is running

for many times until we obtain a sufficient large sample size. Then

the collected dataset is processed by some statistical routine to

extract the information we need.